On June 21, Elon Musk dropped a bombshell on X, claiming that Grok 3.5 (or Grok 4), with its advanced reasoning capabilities, will "rewrite the entire human knowledge corpus," "add missing information, and delete errors." Musk argued that existing large language models are trained on "uncorrected data" and are full of "garbage." He also emphasized that Grok has ten times the computing power of its predecessor, along with new features such as DeepSearch and beta voice interaction. In addition, xAI has an exclusive partnership with Telegram, investing $300 million to bring Grok to more than a billion users in a direct challenge to GPT-4.

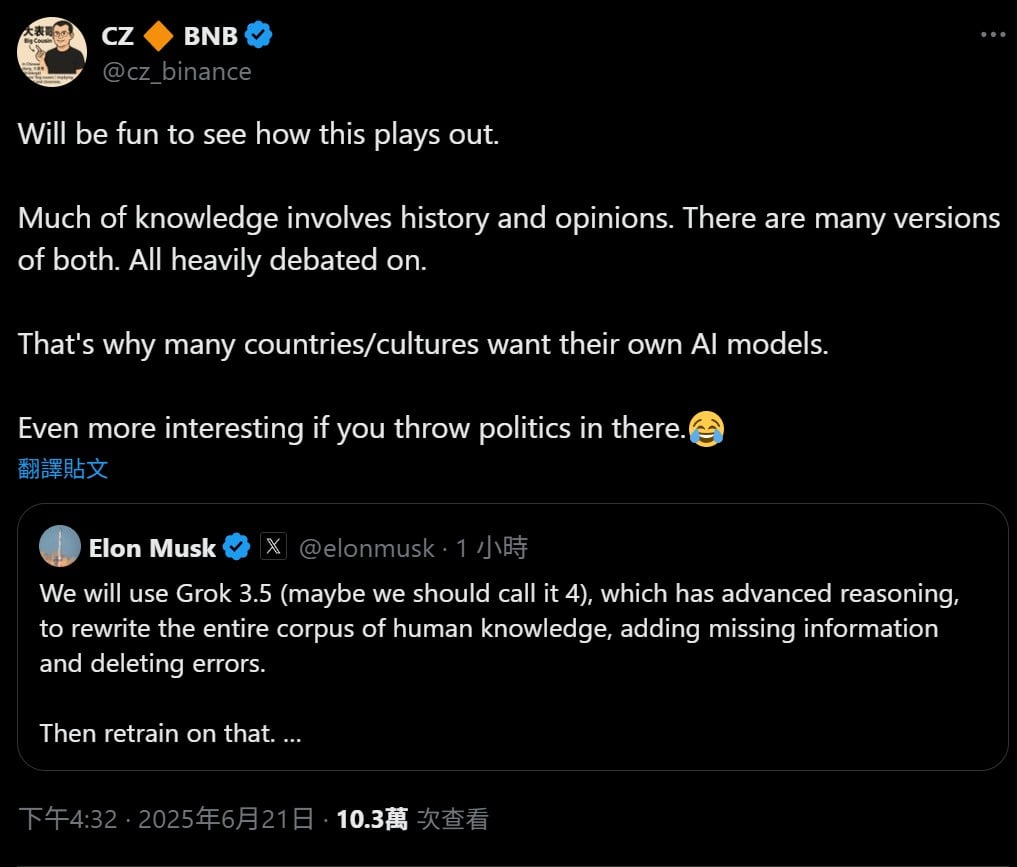

Grok 3.5 (perhaps we should call it 4), with advanced reasoning capabilities, will rewrite the entire human knowledge corpus, add missing information, and delete errors. Then retrain. There is too much garbage in any foundational model trained on uncorrected data.

The Double-Edged Sword of a Distinctive Training Philosophy

Reportedly, xAI has collected large volumes of dialogue from imaginative scenarios such as "zombie apocalypse survival" and "Mars colonization" so that Grok responds more like a real person. It also draws on real-time social trends from X to build a human-centric, up-to-date knowledge base. At the same time, Grok's market strategy deliberately adopts a somewhat "contrarian" or "right-wing" tone to set itself apart. Extensive human annotation and red-team testing can reduce inappropriate outputs, but they also introduce human biases into the model.

Changpeng Zhao (CZ), the founder and former CEO of Binance, pointed to the core contradiction: knowledge involving history and subjective perspectives is inherently diverse, so a single AI attempting to "correct errors" is bound to oversimplify and could create more social problems. As he put it:

Much of what counts as knowledge involves history and perspectives. Both have many versions, and all of them have been fiercely debated. This is why many countries/cultures want their own AI models.

Recent research also indicates that large language models may reproduce copyrighted content or amplify existing biases in how they represent concepts, highlighting the tension between AI and existing social and legal frameworks.

Is Musk Illegally Obtaining Data for Training?

Media reports suggest that Musk's Department of Government Efficiency (DOGE) may be using a customized version of Grok without authorization to analyze US government data, raising concerns about leaks of confidential information and unfair competition. Grok's continuous self-updating through user interactions may improve its accuracy, but it also makes the model harder to regulate. As more countries actively develop their own local models, the AI knowledge landscape will inevitably become more diverse rather than being monopolized by a single platform.

From the bold promise to "rewrite knowledge" to questions about its use in government, Grok's next steps test not only its technology but also society's understanding of truth, privacy, and ethics. How AI and humans will ultimately write the final answer remains to be seen.